If you are a VTuber fan, you may have noticed a surge of suspicious voice recordings on your feed over the last few days. New developments in AI voice synthesis have given people the ability to produce shockingly accurate recordings of someone's speech from just a few 30- to 60-second samples.

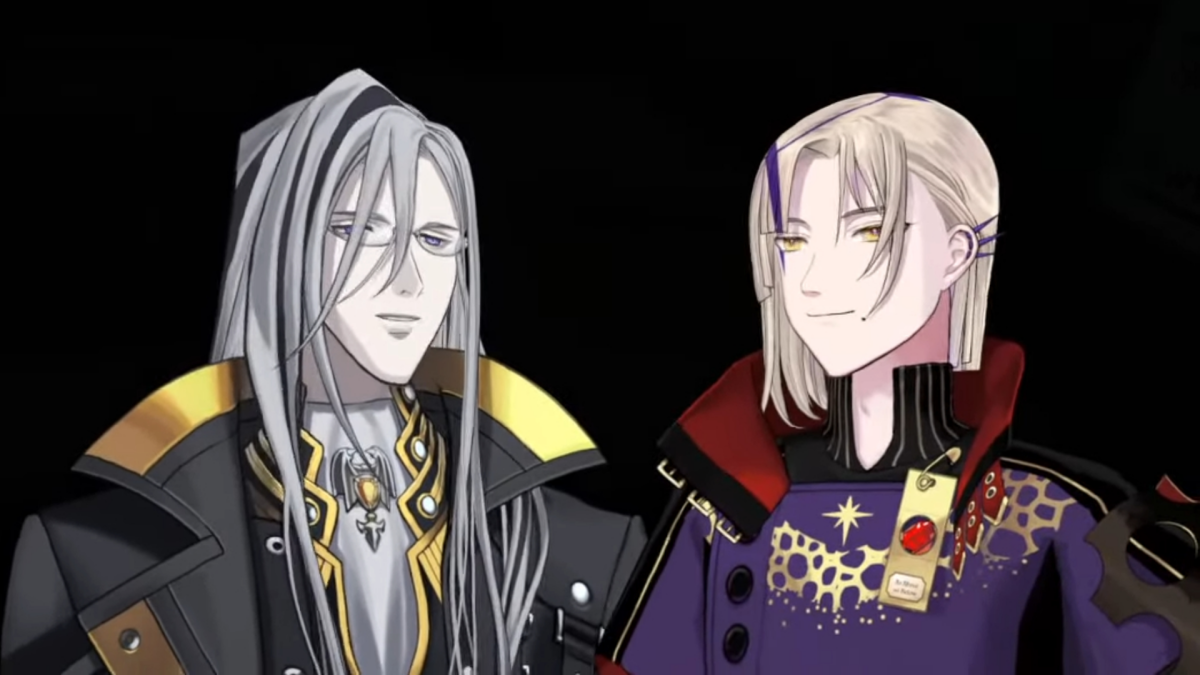

In one example, a clip featured AI versions of the voices of Holostars members Magni Dezmond and Noir Vesper having what sounded like a couple’s argument over a pregnancy. While that clip was satirical, other AI recordings of this kind played off unfounded rumors and served to mock the talents whose voices were imitated.

The wave of AI conversations started after AI startup ElevenLabs opened its beta to the public over the last few days. The platform exploded in popularity over the weekend, and cases of misuse spiked along with it, which the developers quickly flagged.

In response, ElevenLabs acknowledged on Jan. 31 the malicious activity that many claimed its web app has enabled. The startup said it intends to combat this by releasing its audio trace-back tool to the public, which can supposedly be used to identify clips generated by its AI. ElevenLabs claims this feature will be released next week.

Its second strategy is to restrict VoiceLab, the feature that lets users clone a voice from only a few short samples, to paid users. ElevenLabs claimed that “almost all of the malicious content was generated by free, anonymous accounts.”

VTubers in particular have been prime targets for malicious activity. Explicit recordings made the rounds on Twitter and other sites over the last few days, with some garnering hundreds of thousands of impressions.

These ranged from AI-recreated voices of Hololive and NIJISANJI talents reciting copypastas to sexually explicit sentences and phrases in which the stars would ‘submit’ to listeners.

Others included references to bestiality and the voices of VTuber stars reciting racist phrases. Dot Esports has chosen not to embed any of these recordings.

Communities have already formed around using ElevenLabs’ AI tools maliciously, sharing explicit voice recordings and helping one another create them.

The VTubers have no say in how their voices are being exploited, what words are being put in their mouths, or how the resulting clips are spread. In most cases, their voices were sampled to create this content without their knowledge or consent.

The phenomenon sweeping the VTuber community mirrors the current wave of AI deepfake content targeting female streamers.

There is currently a lot of speculation in the community about whether it is legal to train AI on the voices of agency VTubers. In its guidelines for musical derivative works, Hololive states it “[does] not allow extraction of talent’s voices from songs to be used in speech generation, and do not consider this to be derivative work.” Fans are wondering whether this policy would also cover training speech generators on samples taken from outside the VTubers’ music.

A discussion on the topic was posted to the official Hololive subreddit but has since been removed. The post warned users about the new AI and its ability to accurately clone a voice from only a small sample, and advised them not to believe anything they might hear the talents say over the next couple of days. The thread drew over 1,700 upvotes and 270 comments.

The long-term consequences of this technology remain unclear. AI art took the VTuber community by storm in October 2022 and sparked ongoing concern about its impact on artists and the ethics of AI. Then, in early January, AI VTuber Neuro-sama faced a ban over denying the Holocaust.

The arrival of voice AI only compounds these existing issues.

Published: Feb 1, 2023 02:25 am